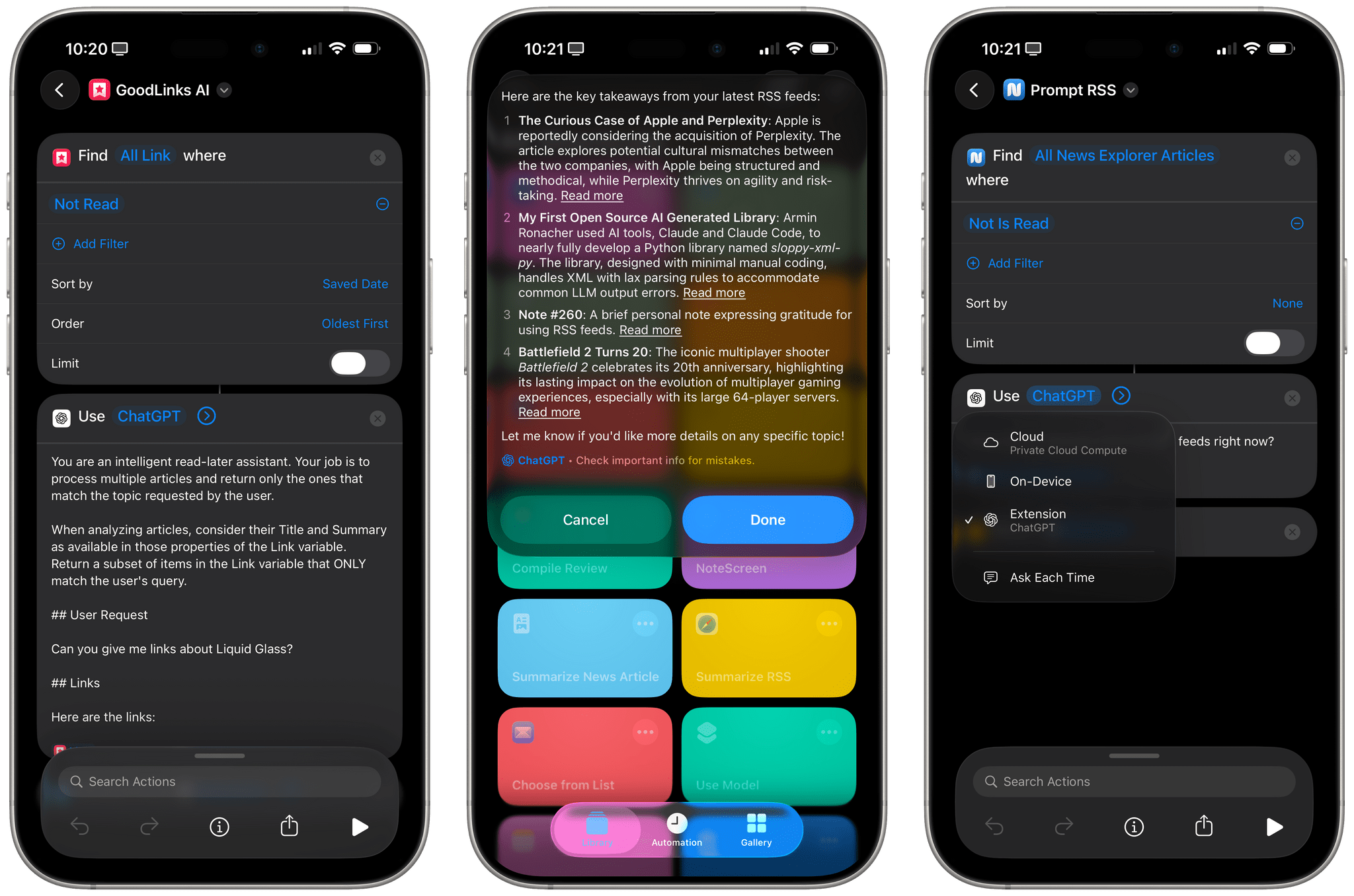

I mentioned this on AppStories during the week of WWDC: I think Apple’s new ‘Use Model’ action in Shortcuts for iOS/iPadOS/macOS 26, which lets you prompt either the local or cloud-based Apple Foundation models, is Apple Intelligence’s best and most exciting new feature for power users this year. This blog post is a way for me to better explain why as well as publicly investigate some aspects of the updated Foundation models that I don’t fully understand yet.

Posts tagged with "AI"

I Have Many Questions About Apple’s Updated Foundation Models and the (Great) ‘Use Model’ Action in Shortcuts

Hands-On: How Apple’s New Speech APIs Outpace Whisper for Lightning-Fast Transcription

Late last Tuesday night, after watching F1: The Movie at the Steve Jobs Theater, I was driving back from dropping Federico off at his hotel when I got a text:

Can you pick me up?

It was from my son Finn, who had spent the evening nearby and was stalking me in Find My. Of course, I swung by and picked him up, and we headed back to our hotel in Cupertino.

On the way, Finn filled me in on a new class in Apple’s Speech framework called SpeechAnalyzer and its SpeechTranscriber module. Both the class and module are part of Apple’s OS betas that were released to developers last week at WWDC. My ears perked up immediately when he told me that he’d tested SpeechAnalyzer and SpeechTranscriber and was impressed with how fast and accurate they were.

It’s still early days for these technologies, but I’m here to tell you that their speed alone is a game changer for anyone who uses voice transcription to create text from lectures, podcasts, YouTube videos, and more. That’s something I do multiple times every week for AppStories, NPC, and Unwind, generating transcripts that I upload to YouTube because the site’s built-in transcription isn’t very good.

What’s frustrated me with other tools is how slow they are. Most are built on Whisper, OpenAI’s open source speech-to-text model, which was released in 2022. It’s cheap at under a penny per one million tokens, but isn’t fast, which is frustrating when you’re in the final steps of a YouTube workflow.

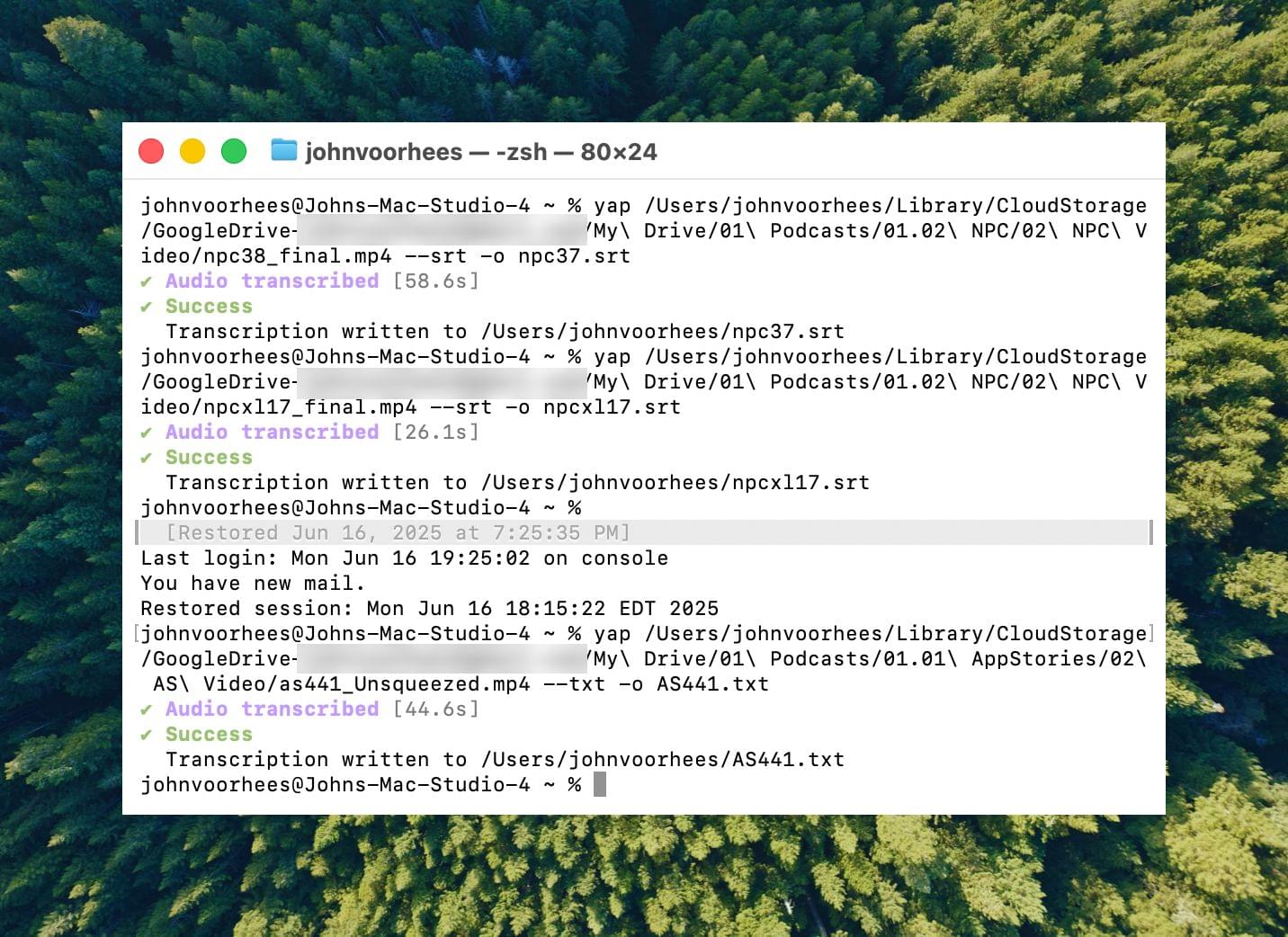

I asked Finn what it would take to build a command line tool to transcribe video and audio files with SpeechAnalyzer and SpeechTranscriber. He figured it would only take about 10 minutes, and he wasn’t far off. In the end, it took me longer to get around to installing macOS Tahoe after WWDC than it took Finn to build Yap, a simple command line utility that takes audio and video files as input and outputs SRT- and TXT-formatted transcripts.
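For readers who haven't worked with SRT files, here is a minimal Python sketch of the format: numbered cues, each with a `HH:MM:SS,mmm --> HH:MM:SS,mmm` time range followed by the caption text. This is not Yap's code; the `(start, end, text)` segment tuples and both function names are hypothetical, chosen only to illustrate the layout.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as the HH:MM:SS,mmm timestamp used by SRT."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments) -> str:
    """Render (start, end, text) tuples as numbered SRT cues.

    The tuple shape is an assumption for this sketch, not a format
    produced by any particular transcription tool.
    """
    cues = []
    for index, (start, end, text) in enumerate(segments, start=1):
        cues.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    # Cues are separated by a blank line.
    return "\n".join(cues)


if __name__ == "__main__":
    print(to_srt([(0.0, 2.5, "Welcome to AppStories."), (2.5, 5.0, "I'm John.")]))
```

A TXT transcript is simply the same text without the cue numbers and time ranges.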

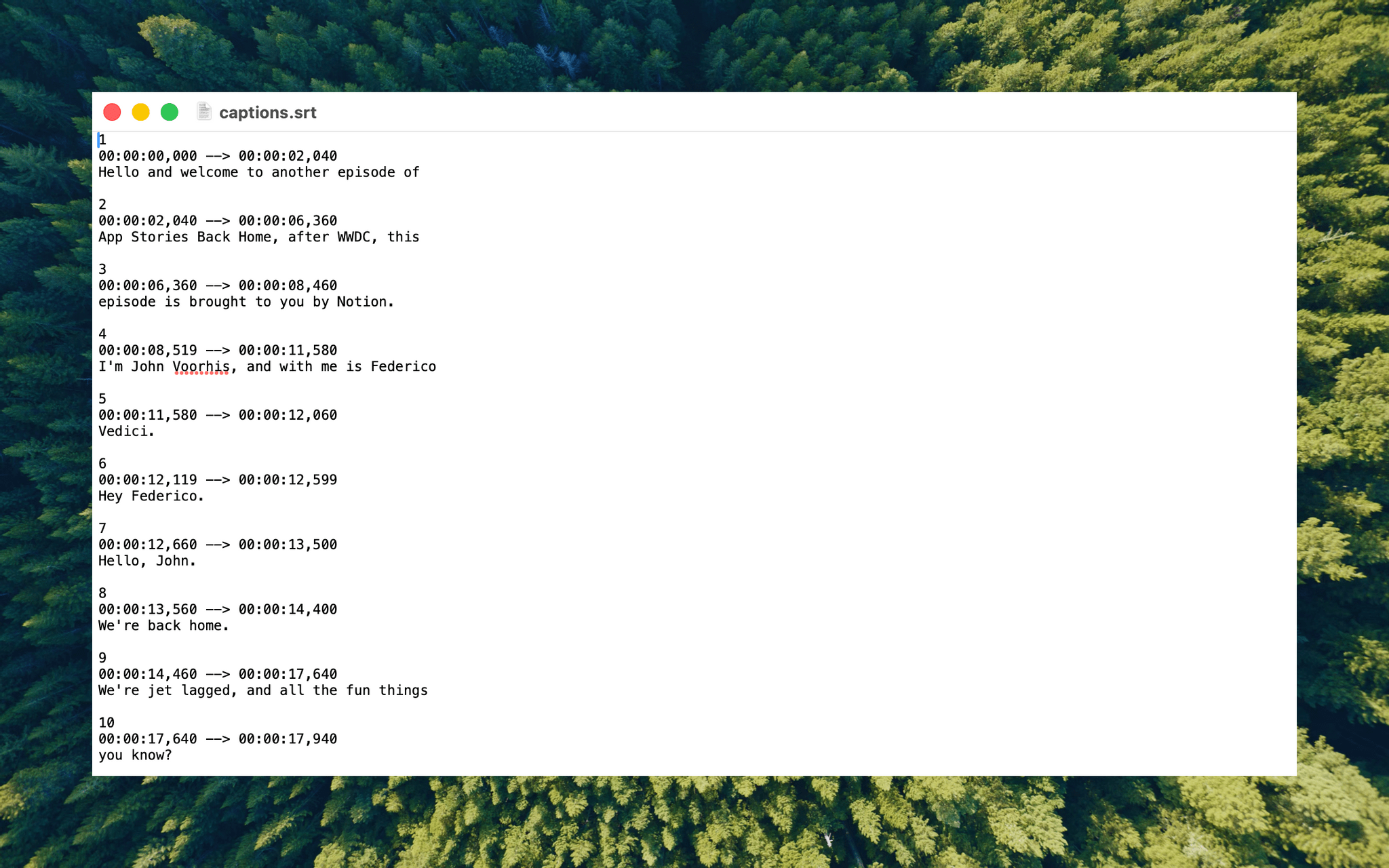

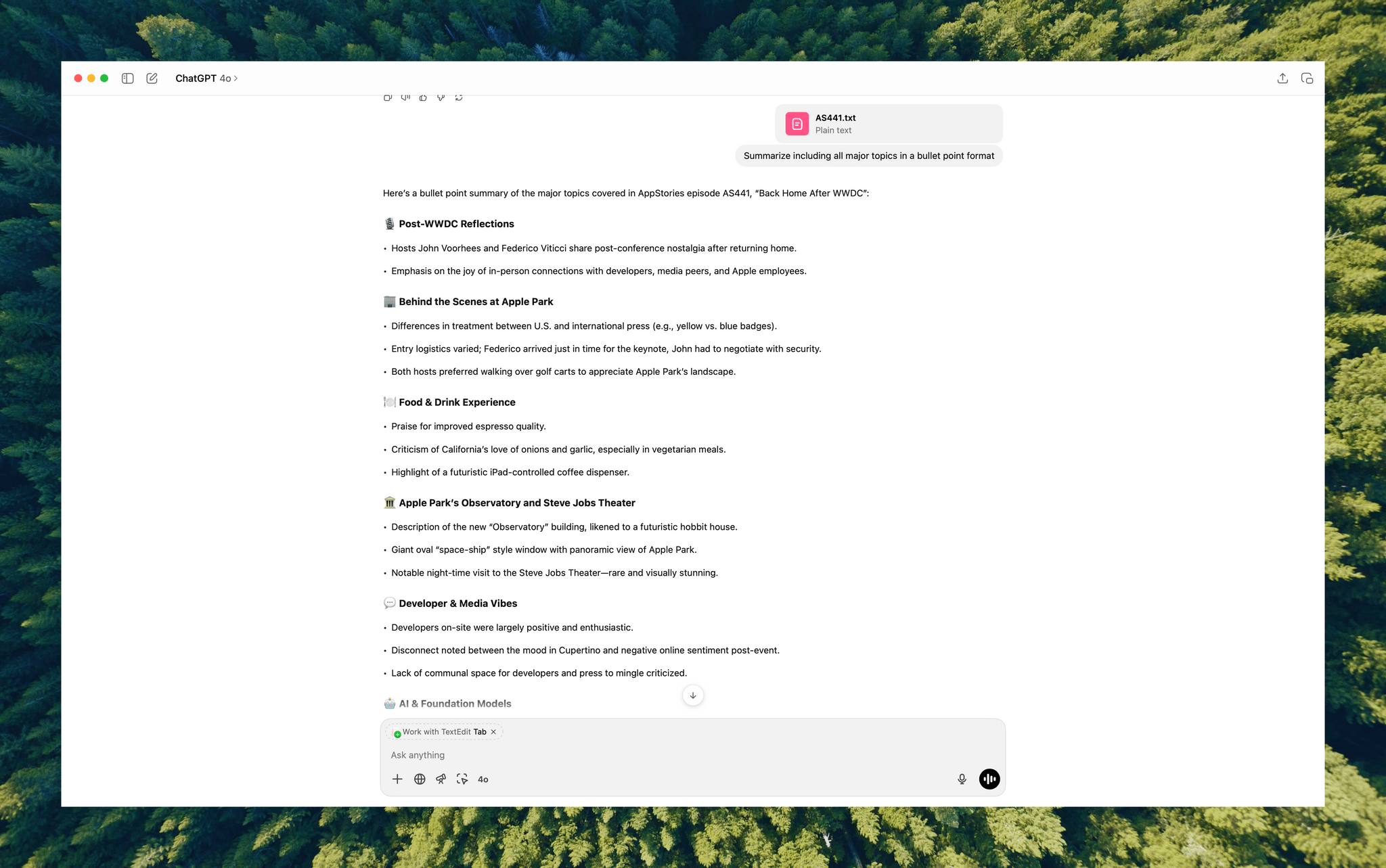

Yesterday, I finally took the Tahoe plunge and immediately installed Yap. I grabbed the 7GB 4K video version of AppStories episode 441, which is about 34 minutes long, and ran it through Yap. It took just 45 seconds to generate an SRT file. Here’s Yap ripping through nearly 20% of an episode of NPC in 10 seconds:

Next, I ran the same file through VidCap and MacWhisper, using its V2 Large and V3 Turbo models. Here’s how each app and model did:

| App | Transcription Time |
|---|---|
| Yap | 0:45 |
| MacWhisper (Large V3 Turbo) | 1:41 |
| VidCap | 1:55 |
| MacWhisper (Large V2) | 3:55 |

All three transcription workflows had similar trouble with last names and words like “AppStories,” which LLMs tend to separate into two words instead of camel casing. That’s easily fixed by running a set of find and replace rules, although I’d love to feed those corrections back into the model itself for future transcriptions.
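A set of find-and-replace rules like the one described above could be sketched in Python as follows. Only the "AppStories" correction comes from the text; the second rule, the `RULES` list, and the `apply_rules` name are hypothetical and exist purely for illustration.

```python
import re

# Each rule pairs a pattern for a common mis-transcription with the
# preferred spelling. Only the "AppStories" case is from the article;
# the "MacStories" rule is an illustrative assumption.
RULES = [
    (re.compile(r"\bapp\s+stories\b", re.IGNORECASE), "AppStories"),
    (re.compile(r"\bmac\s+stories\b", re.IGNORECASE), "MacStories"),
]


def apply_rules(text: str, rules=RULES) -> str:
    """Apply every find-and-replace rule to the transcript, in order."""
    for pattern, replacement in rules:
        text = pattern.sub(replacement, text)
    return text


if __name__ == "__main__":
    print(apply_rules("Welcome to App Stories, a Mac stories podcast."))
```

Because the patterns require whitespace between the two words, text that is already spelled correctly passes through unchanged.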

What stood out above all else was Yap’s speed. By harnessing SpeechAnalyzer and SpeechTranscriber on-device, the command line tool tore through the 7GB video file a full 2.2× faster than MacWhisper’s Large V3 Turbo model, with no noticeable difference in transcription quality.

At first blush, the difference between 0:45 and 1:41 may seem insignificant, and it arguably is, but those are the results for just one 34-minute video. Extrapolate that to running Yap against the hours of Apple Developer videos released on YouTube with the help of yt-dlp, and suddenly, you’re talking about a significant amount of time. Like all automation, picking up a 2.2× speed gain one video or audio clip at a time, multiple times each week, adds up quickly.
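The 2.2× figure can be checked directly from the table. The short Python sketch below converts each time to seconds and compares it against Yap's; it also estimates how much faster than real time each run was, assuming the video is exactly 34 minutes long (the text says "about 34 minutes," so that part is approximate).

```python
# Transcription times copied from the table above.
TIMES = {
    "Yap": "0:45",
    "MacWhisper (Large V3 Turbo)": "1:41",
    "VidCap": "1:55",
    "MacWhisper (Large V2)": "3:55",
}

# Assumption: the "about 34 minutes" episode is treated as exactly 34:00.
VIDEO_SECONDS = 34 * 60


def to_seconds(mmss: str) -> int:
    """Convert an M:SS string into a number of seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def ratio_to_yap(app: str) -> float:
    """How many times longer than Yap a given app took."""
    return to_seconds(TIMES[app]) / to_seconds(TIMES["Yap"])


if __name__ == "__main__":
    for app, time in TIMES.items():
        secs = to_seconds(time)
        print(
            f"{app}: {secs}s, {ratio_to_yap(app):.1f}x Yap's time, "
            f"~{VIDEO_SECONDS / secs:.0f}x real time"
        )
```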

Whether you’re producing video for YouTube and need subtitles, generating transcripts to summarize lectures at school, or doing something else, SpeechAnalyzer and SpeechTranscriber – available across the iPhone, iPad, Mac, and Vision Pro – mark a significant leap forward in transcription speed without compromising on quality. I fully expect this combination to replace Whisper as the default model for transcription apps on Apple platforms.

To test Apple’s new model, install the macOS Tahoe beta, which currently requires an Apple developer account, and then install Yap from its GitHub page.

Testing DeepSeek R1-0528 on the M3 Ultra Mac Studio and Installing Local GGUF Models with Ollama on macOS

DeepSeek released an updated version of their popular R1 reasoning model (version 0528) with – according to the company – increased benchmark performance, reduced hallucinations, and native support for function calling and JSON output. Early tests from Artificial Analysis report a nice bump in performance, putting it behind OpenAI’s o3 and o4-mini-high in their Intelligence Index benchmarks. The model is available in the official DeepSeek API, and open weights have been distributed on Hugging Face. I downloaded different quantized versions of the full model on my M3 Ultra Mac Studio, and here are some notes on how it went.

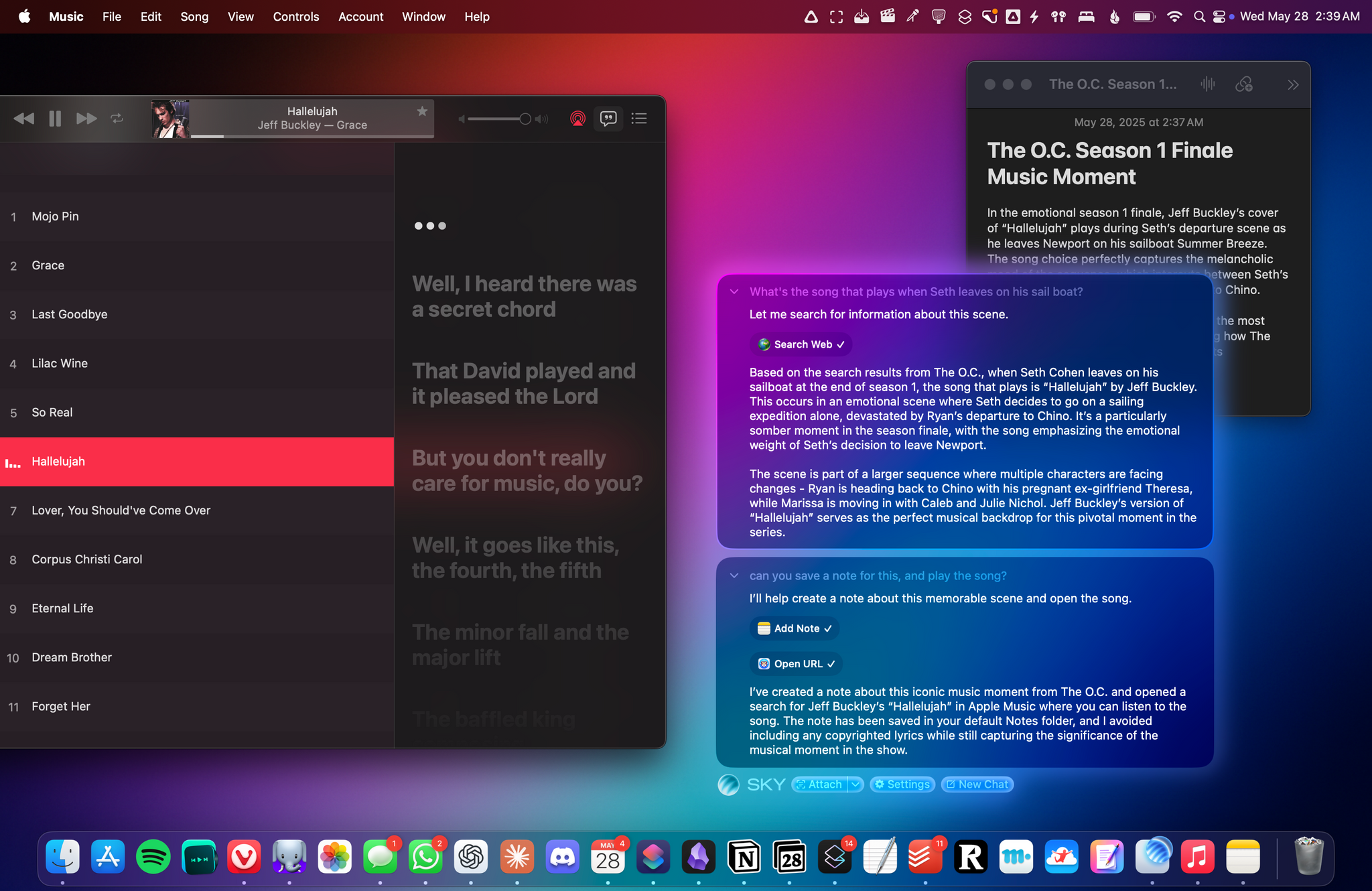

From the Creators of Shortcuts, Sky Extends AI Integration and Automation to Your Entire Mac

Over the course of my career, I’ve had three distinct moments in which I saw a brand-new app and immediately felt it was going to change how I used my computer – and they were all about empowering people to do more with their devices.

I had that feeling the first time I tried Editorial, the scriptable Markdown text editor by Ole Zorn. I knew right away when two young developers told me about their automation app, Workflow, in 2014. And I couldn’t believe it when Apple showed that not only had they acquired Workflow, but they were going to integrate the renamed Shortcuts app system-wide on iOS and iPadOS.

Notably, the same two people – Ari Weinstein and Conrad Kramer – were involved with two of those three moments, first with Workflow, then with Shortcuts. And a couple of weeks ago, I found out that they were going to define my fourth moment, along with their co-founder Kim Beverett at Software Applications Incorporated, with the new app they’ve been working on in secret since 2023 and officially announced today.

For the past two weeks, I’ve been able to use Sky, the new app from the people behind Shortcuts who left Apple two years ago. As soon as I saw a demo, I felt the same way I did about Editorial, Workflow, and Shortcuts: I knew Sky was going to fundamentally change how I think about my macOS workflow and the role of automation in my everyday tasks.

Only this time, because of AI and LLMs, Sky is more intuitive than all those apps and requires a different approach, as I will explain in this exclusive preview story ahead of a full review of the app later this year.

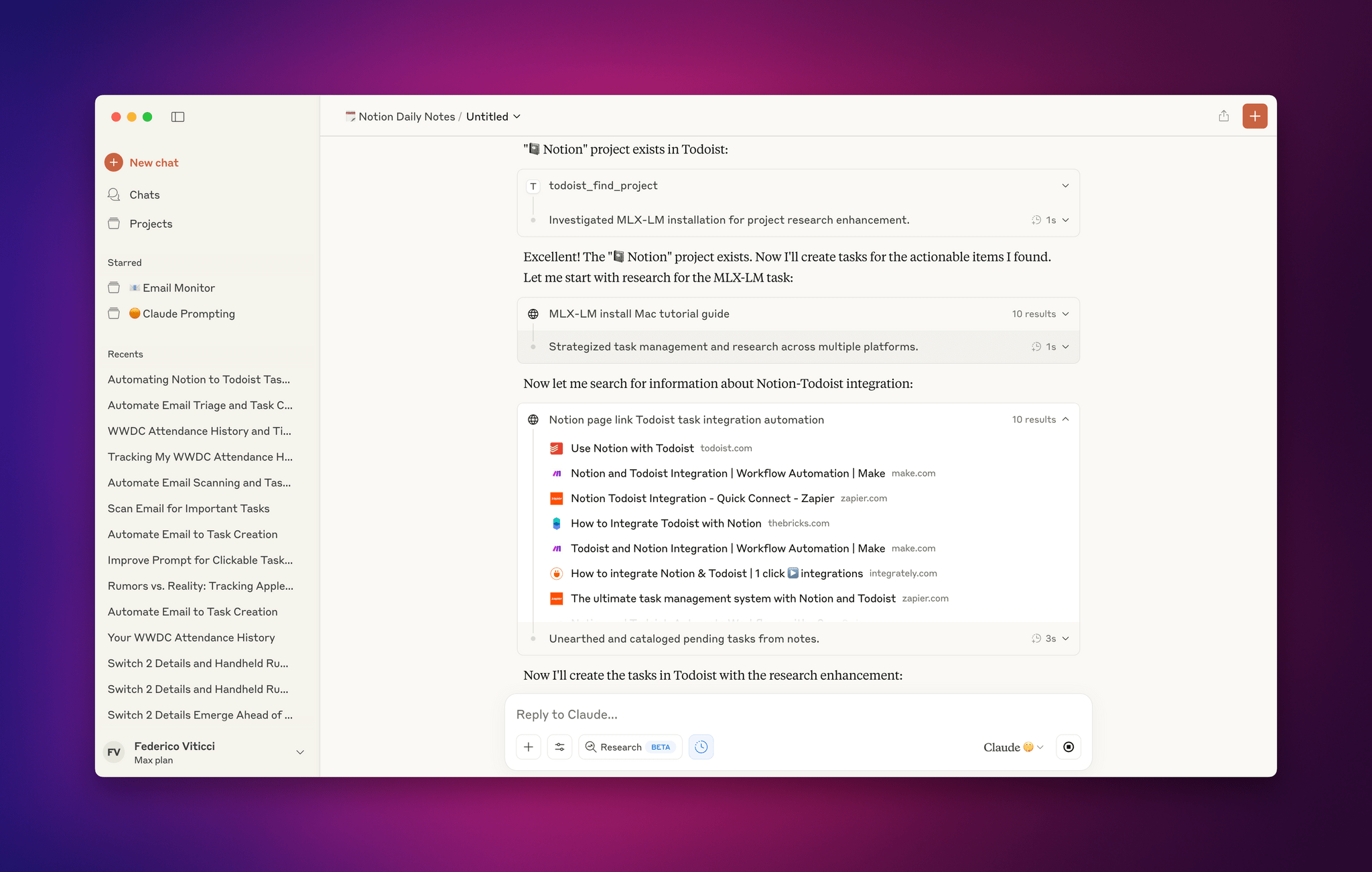

Early Impressions of Claude Opus 4 and Using Tools with Extended Thinking

For the past two days, I’ve been testing an early access version of Claude Opus 4, the latest model by Anthropic that was just announced today. You can read more about the model in the official blog post and find additional documentation here. What follows is a series of initial thoughts and notes based on the 48 hours I spent with Claude Opus 4, which I tested in both the Claude app and Claude Code.

For starters, Anthropic describes Opus 4 as its most capable hybrid model with improvements in coding, writing, and reasoning. I don’t use AI for creative writing, but I have dabbled with “vibe coding” for a collection of personal Obsidian plugins (created and managed with Claude Code, following these tips by Harper Reed), and I’m especially interested in Claude’s integrations with Google Workspace and MCP servers. (My favorite solution for MCP at the moment is Zapier, which I’ve been using for a long time for web automations.) So I decided to focus my tests on reasoning with integrations and some light experiments with the upgraded Claude Code in the macOS Terminal.

OpenAI to Buy Jony Ive’s Stealth Startup for $6.5 Billion→

Jony Ive’s stealth AI company known as io is being acquired by OpenAI for $6.5 billion in a deal that is expected to close this summer subject to regulatory approvals. According to reporting by Mark Gurman and Shirin Ghaffary of Bloomberg:

The purchase — the largest in OpenAI’s history — will provide the company with a dedicated unit for developing AI-powered devices. Acquiring the secretive startup, named io, also will secure the services of Ive and other former Apple designers who were behind iconic products such as the iPhone.

The partnership builds on a 23% stake in io that OpenAI purchased at the end of last year and comes with what Bloomberg describes as 55 hardware engineers, software developers, and manufacturing experts, plus a cast of accomplished designers.

Ive had this to say about the purportedly novel products he and OpenAI CEO Sam Altman are planning:

“People have an appetite for something new, which is a reflection on a sort of an unease with where we currently are,” Ive said, referring to products available today. Ive and Altman’s first devices are slated to debut in 2026.

Bloomberg also notes that Ive and his team of designers will be taking over all design at OpenAI, including software design like ChatGPT.

For now, the products OpenAI is working on remain a mystery, but given the purchase price and io’s willingness to take its first steps into the spotlight, I expect we’ll be hearing more about this historic collaboration in the months to come.

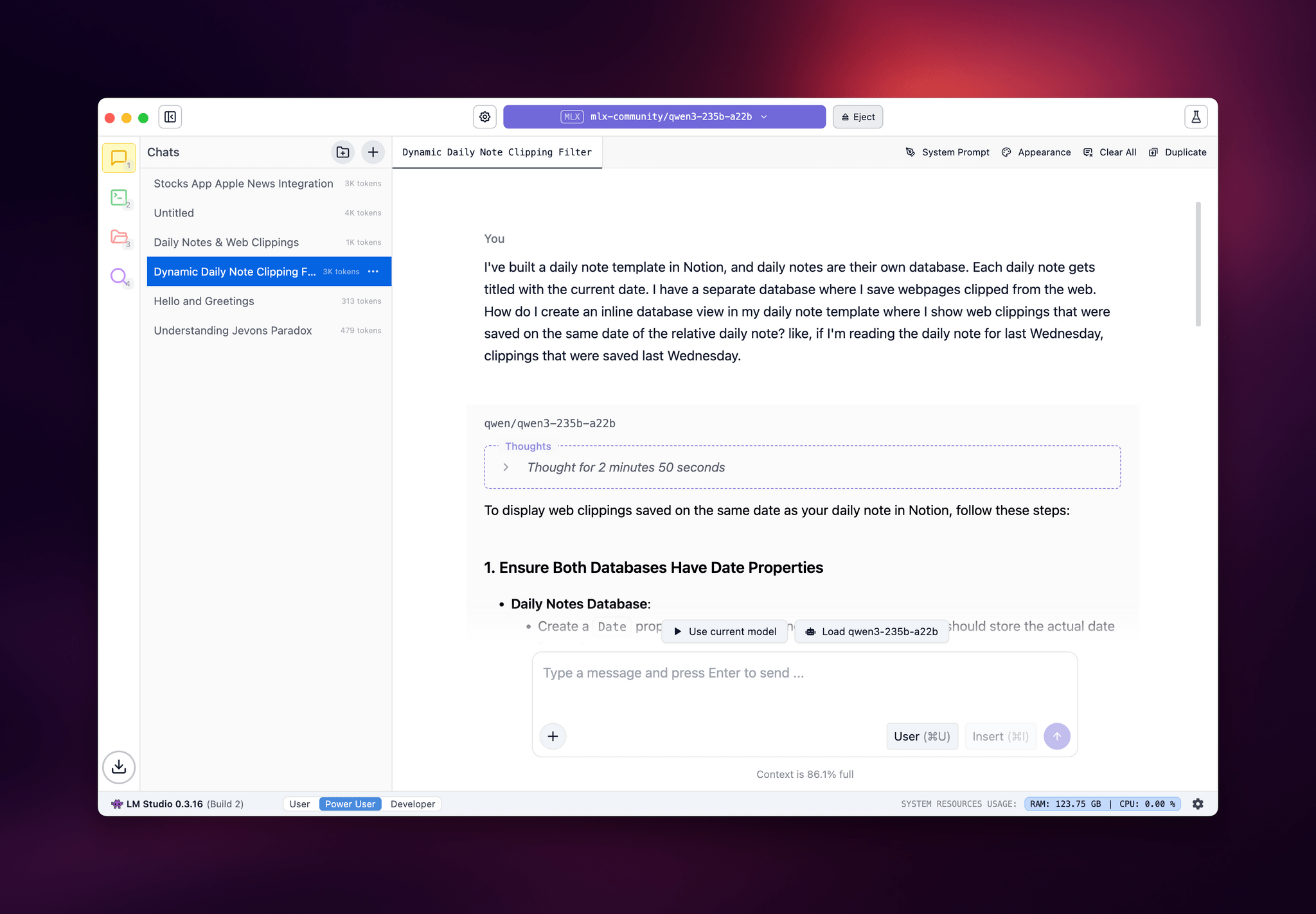

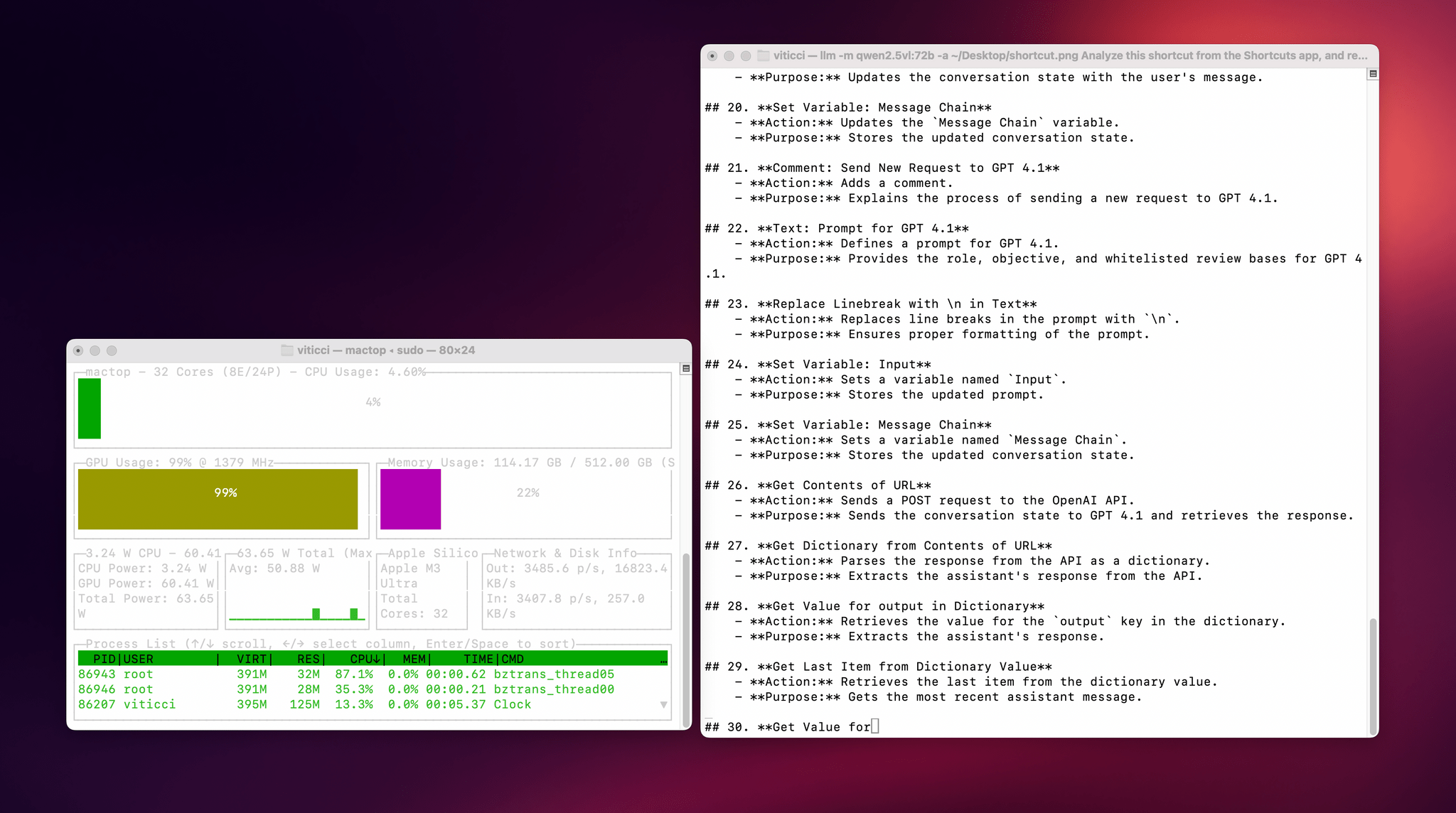

Notes on Early Mac Studio AI Benchmarks with Qwen3-235B-A22B and Qwen2.5-VL-72B

I received a top-of-the-line Mac Studio (M3 Ultra, 512 GB of RAM, 8 TB of storage) on loan from Apple last week, and I thought I’d use this opportunity to revive something I’ve been mulling over for some time: more short-form blogging on MacStories in the form of brief “notes” with a dedicated Notes category on the site. Expect more of these “low-pressure”, quick posts in the future.

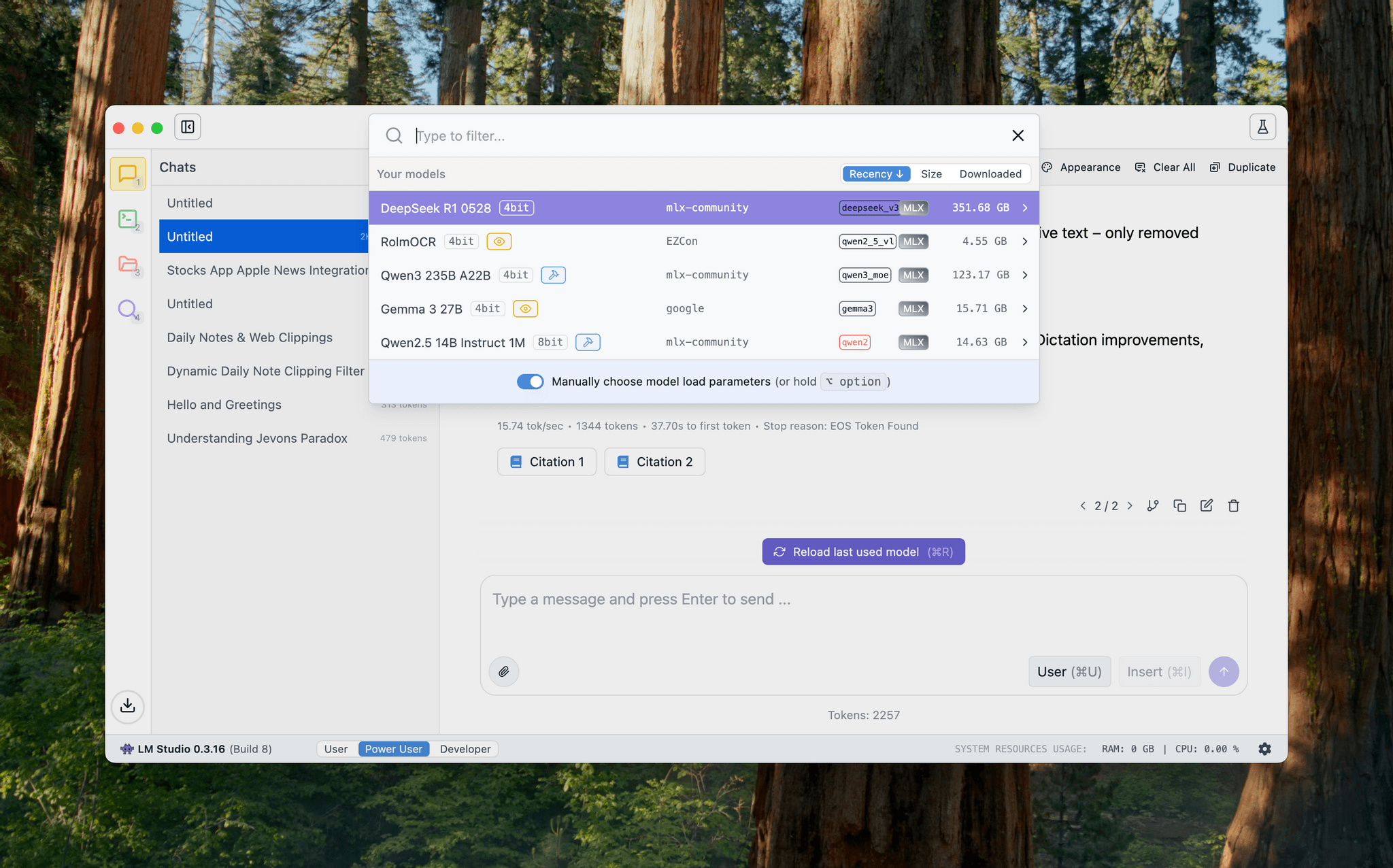

I’ve been sent this Mac Studio as part of my ongoing experiments with assistive AI and automation, and one of the things I plan to do over the coming weeks and months is play around with local LLMs that tap into the power of Apple Silicon and the incredible performance headroom afforded by the M3 Ultra and this computer’s specs. I have a lot to learn when it comes to local AI (my shortcuts and experiments so far have focused on cloud models and the Shortcuts app combined with the LLM CLI), but since I had to start somewhere, I downloaded LM Studio and Ollama, installed the llm-ollama plugin, and began experimenting with open-weights models (served from Hugging Face as well as the Ollama library) both in the GGUF format and Apple’s own MLX framework.

I posted some of these early tests on Bluesky. I ran the massive Qwen3-235B-A22B model (a Mixture-of-Experts model with 235 billion parameters, 22 billion of which are activated at once) with both GGUF and MLX using the beta version of the LM Studio app, and these were the results:

- GGUF: 16 tokens/second, ~133 GB of RAM used

- MLX: 24 tok/sec, ~124 GB RAM

As you can see from these first benchmarks (both based on the 4-bit quant of Qwen3-235B-A22B), the Apple Silicon-optimized version of the model resulted in better performance both for token generation and memory usage. Regardless of the version, the Mac Studio absolutely didn’t care and I could barely hear the fans going.
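To put numbers on that difference, here is a small Python sketch that computes the relative change between the two runs. The throughput and memory figures are the ones listed above; the 1,000-token reply used for the timing estimate is a hypothetical example, not something measured.

```python
# Figures from the two LM Studio runs listed above.
RESULTS = {
    "GGUF": {"tokens_per_second": 16, "ram_gb": 133},
    "MLX": {"tokens_per_second": 24, "ram_gb": 124},
}


def relative_change(new: float, old: float) -> float:
    """Percentage change going from `old` to `new`."""
    return (new - old) / old * 100


def seconds_to_generate(tokens: int, tokens_per_second: float) -> float:
    """Time to generate a reply of `tokens` tokens at a steady rate."""
    return tokens / tokens_per_second


speed_gain = relative_change(
    RESULTS["MLX"]["tokens_per_second"], RESULTS["GGUF"]["tokens_per_second"]
)
ram_change = relative_change(RESULTS["MLX"]["ram_gb"], RESULTS["GGUF"]["ram_gb"])

if __name__ == "__main__":
    print(f"MLX throughput vs. GGUF: {speed_gain:+.1f}%")
    print(f"MLX memory vs. GGUF: {ram_change:+.1f}%")
    # Hypothetical 1,000-token reply, for a sense of scale only.
    for name, run in RESULTS.items():
        secs = seconds_to_generate(1000, run["tokens_per_second"])
        print(f"{name}: {secs:.1f}s for 1,000 tokens")
```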

I also wanted to play around with the new generation of vision models (VLMs) to test modern OCR capabilities of these models. One of the tasks that has become kind of a personal AI eval for me lately is taking a long screenshot of a shortcut from the Shortcuts app (using CleanShot’s scrolling captures) and feeding it either as a full-res PNG or PDF to an LLM. As I shared before, due to image compression, the vast majority of cloud LLMs either fail to accept the image as input or compress the image so much that graphical artifacts lead to severe hallucinations in the text analysis of the image. Only o4-mini-high – thanks to its more agentic capabilities and tool-calling – was able to produce a decent output; even then, that was only possible because o4-mini-high decided to slice the image into multiple parts and iterate through each one with discrete pytesseract calls. The task took almost seven minutes to run in ChatGPT.
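The slicing approach is easy to picture with a short Python sketch. This is not the code o4-mini-high ran, which isn't available; it is a minimal illustration of cutting a tall screenshot into overlapping horizontal bands so that text on a seam appears whole in at least one slice. The function name, tile height, and overlap values are all assumptions.

```python
def tile_bounds(height: int, tile_height: int, overlap: int):
    """Return (top, bottom) pixel rows for overlapping horizontal slices.

    Each slice starts `overlap` rows before the previous one ended, so a
    line of text cut by one boundary is intact in the neighboring slice.
    """
    if tile_height <= overlap:
        raise ValueError("tile_height must be larger than overlap")
    bounds = []
    top = 0
    while True:
        bottom = min(top + tile_height, height)
        bounds.append((top, bottom))
        if bottom >= height:
            break
        top = bottom - overlap
    return bounds


if __name__ == "__main__":
    # Hypothetical 5,000-pixel-tall scrolling capture.
    for top, bottom in tile_bounds(5000, tile_height=2000, overlap=200):
        # With Pillow and pytesseract installed, each band could be OCR'd as:
        #   pytesseract.image_to_string(image.crop((0, top, image.width, bottom)))
        print(top, bottom)
```

The OCR output for each band would then be concatenated, with duplicated lines from the overlap regions removed.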

This morning, I installed the 72-billion parameter version of Qwen2.5-VL, gave it a full-resolution screenshot of a 40-action shortcut, and let it run with Ollama and llm-ollama. After 3.5 minutes and around 100 GB RAM usage, I got a really good, Markdown-formatted analysis of my shortcut back from the model.

To make the experience nicer, I even built a small local-scanning utility that lets me pick an image from Shortcuts and runs it through Qwen2.5-VL (72B) using the ‘Run Shell Script’ action on macOS. It worked beautifully on my first try. Amusingly, the smaller version of Qwen2.5-VL (32B) thought my photo of ergonomic mice was a “collection of seashells”. Fair enough: there’s a reason bigger models are heavier and costlier to run.
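For anyone who wants to try something similar without the `llm` CLI, the Python sketch below sends an image to a locally running Ollama server through its `/api/generate` endpoint, which accepts base64-encoded images for vision models. This is not the utility described above, which uses llm-ollama from a ‘Run Shell Script’ action. The model tag `qwen2.5vl:72b`, the file name, and the prompt are assumptions; check `ollama list` for the tag installed on your machine.

```python
import base64
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address
MODEL = "qwen2.5vl:72b"  # assumed tag; verify with `ollama list`


def build_payload(image_bytes: bytes, prompt: str, model: str = MODEL) -> dict:
    """Build the JSON body for a single, non-streaming vision request."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }


def describe_image(path: str, prompt: str) -> str:
    """Send the image at `path` to the local model and return its reply."""
    with open(path, "rb") as image_file:
        payload = build_payload(image_file.read(), prompt)
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


if __name__ == "__main__":
    # Hypothetical file name and prompt; requires Ollama to be running.
    print(describe_image("shortcut.png", "Describe each action in this shortcut as Markdown."))
```

Saved as a script, this could be called from a ‘Run Shell Script’ action with the image path passed in as an argument.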

Given my struggles with OCR and document analysis with cloud-hosted models, I’m very excited about the potential of local VLMs that bypass memory constraints thanks to the M3 Ultra and provide accurate results in just a few minutes without having to upload private images or PDFs anywhere. I’ve been writing a lot about this idea of “hybrid automation” that combines traditional Mac scripting tools, Shortcuts, and LLMs to unlock workflows that just weren’t possible before; I feel like the power of this Mac Studio is going to be an amazing accelerator for that.

Next up on my list: understanding how to run MLX models with mlx-lm, investigating long-context models with dual-chunk attention support (looking at you, Qwen 2.5), and experimenting with Gemma 3. Fun times ahead!

Is Apple’s AI Predicament Fixable?→

On Sunday, Bloomberg’s Mark Gurman published a comprehensive recap of Apple’s AI troubles. There wasn’t much new in Gurman’s story, except quotes from unnamed sources that added to the sense of conflict playing out inside the company. That said, it’s perfect if you haven’t been paying close attention since Apple Intelligence was first announced last June.

What’s troubling about Apple’s predicament isn’t that Apple’s super mom and other AI illustrations look like they were generated in 2022, a lifetime ago in the world of AI. The trouble is what the company’s struggles mean for next-generation interactions with devices and productivity apps. The promise of natural language requests made to Siri that combine personal context with App Intents is exciting, but it’s mired in multiple layers of technical issues that need to be solved starting, as Gurman reported, with Siri.

The mess is so profound that it raises the question of whether Apple has the institutional capabilities to fix it. As M.G. Siegler wrote yesterday on Spyglass:

Apple, as an organization, simply doesn’t seem built correctly to operate in the age of AI. This technology, even more so than the web, moves insanely fast and is all about iteration. Apple likes to move slowly, measuring a million times and cutting once. Shipping polished jewels. That’s just not going to cut it with AI.

Having studied the fierce competition among AI companies for months, I agree with Siegler. This isn’t like hardware where Apple has successfully entered a category late and dominated it. Hardware plays to Apple’s design and supply chain strengths. In contrast, the rapid iteration of AI models and apps is the antithesis of Apple’s annual OS cycle. It’s a fundamentally different approach driven by intense competition and fueled by billions of dollars of cash.

I tend to agree with Siegler that given where things stand, Apple should replace a lot of Siri’s capabilities with a third-party chatbot and in the longer-term make an acquisition to shake up how it approaches AI. However, I also think neither of those things is likely to happen given Apple’s historical focus on internally developed solutions.

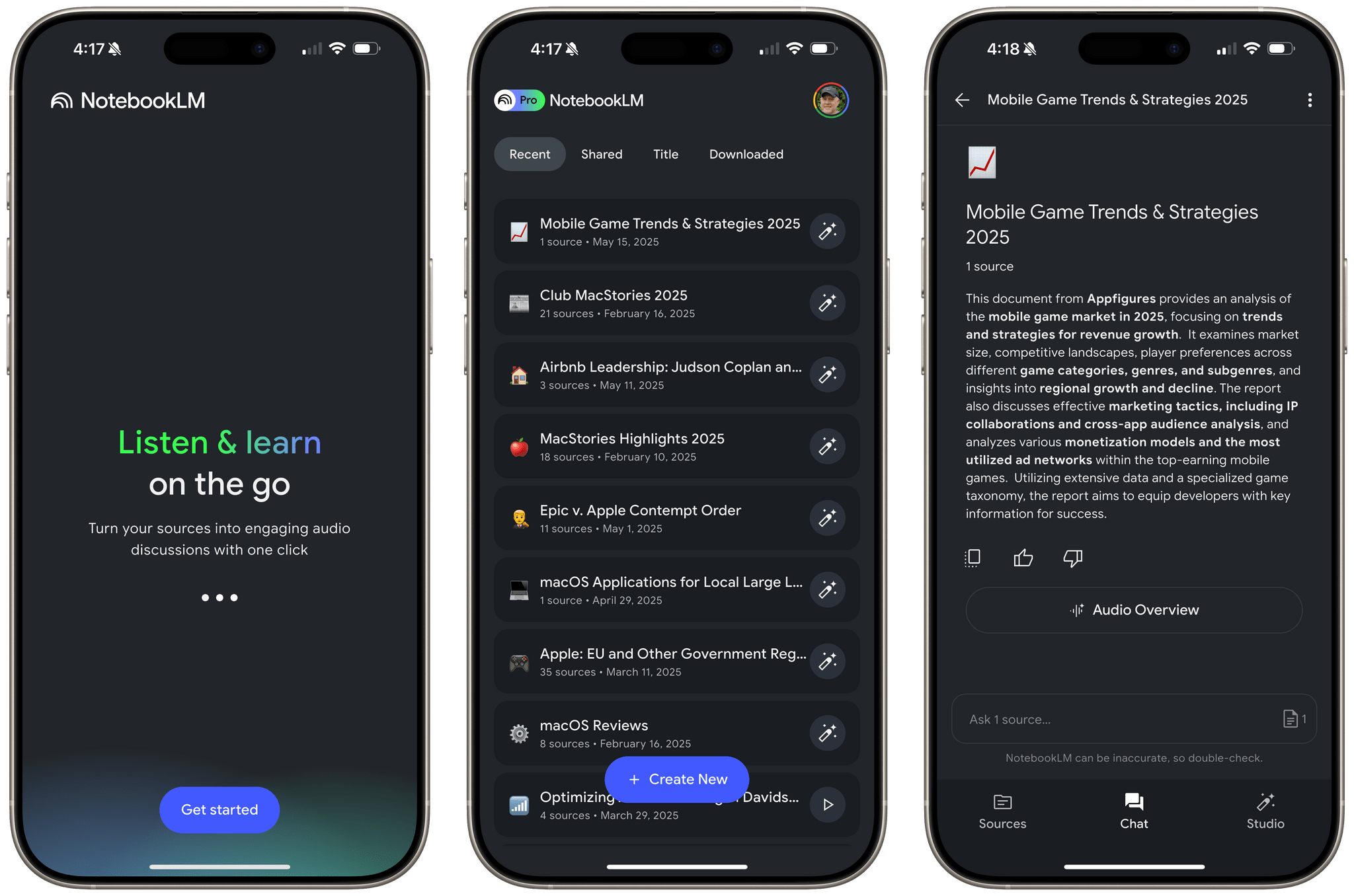

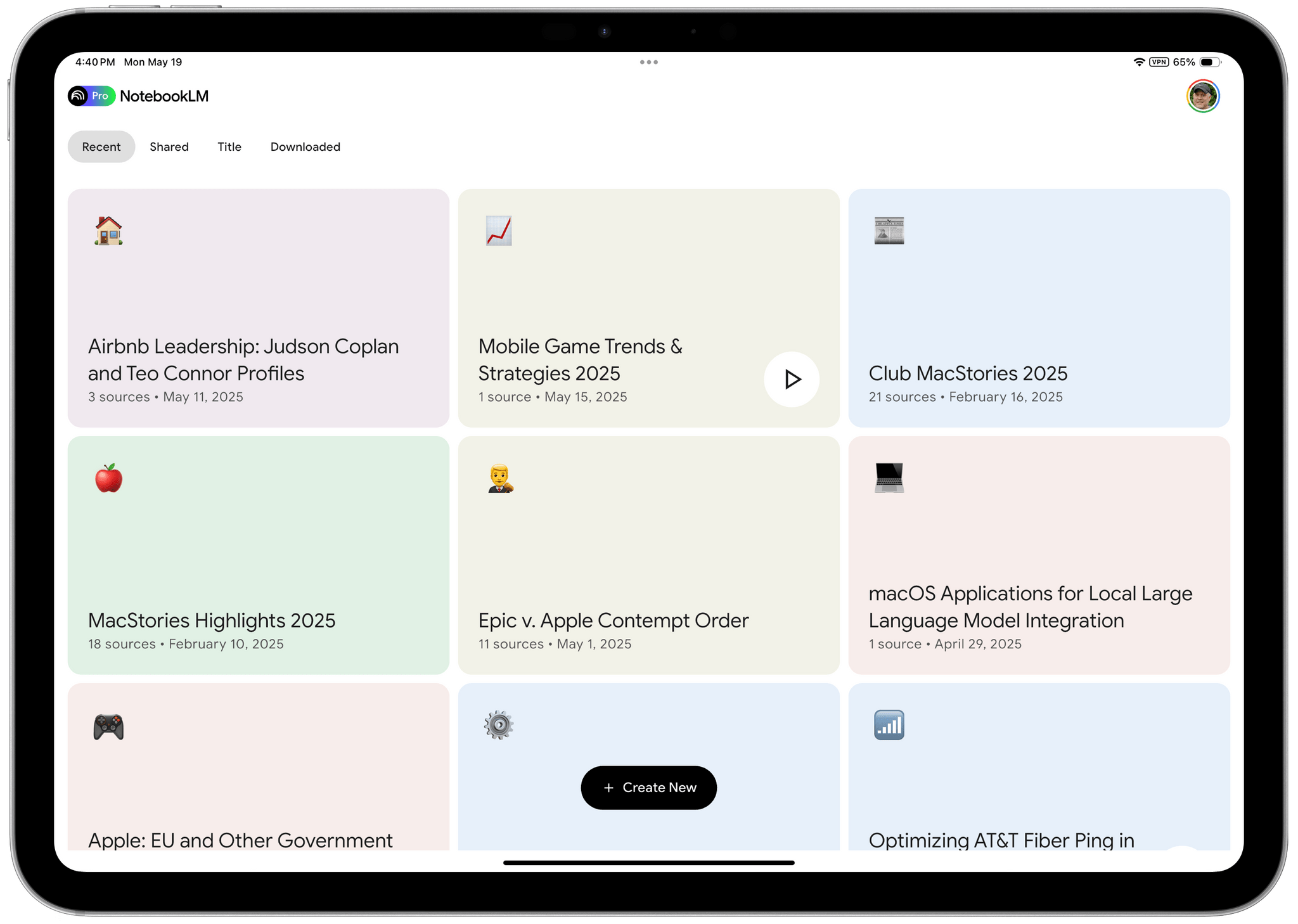

Google Brings Its NotebookLM Research Tool to iPhone and iPad

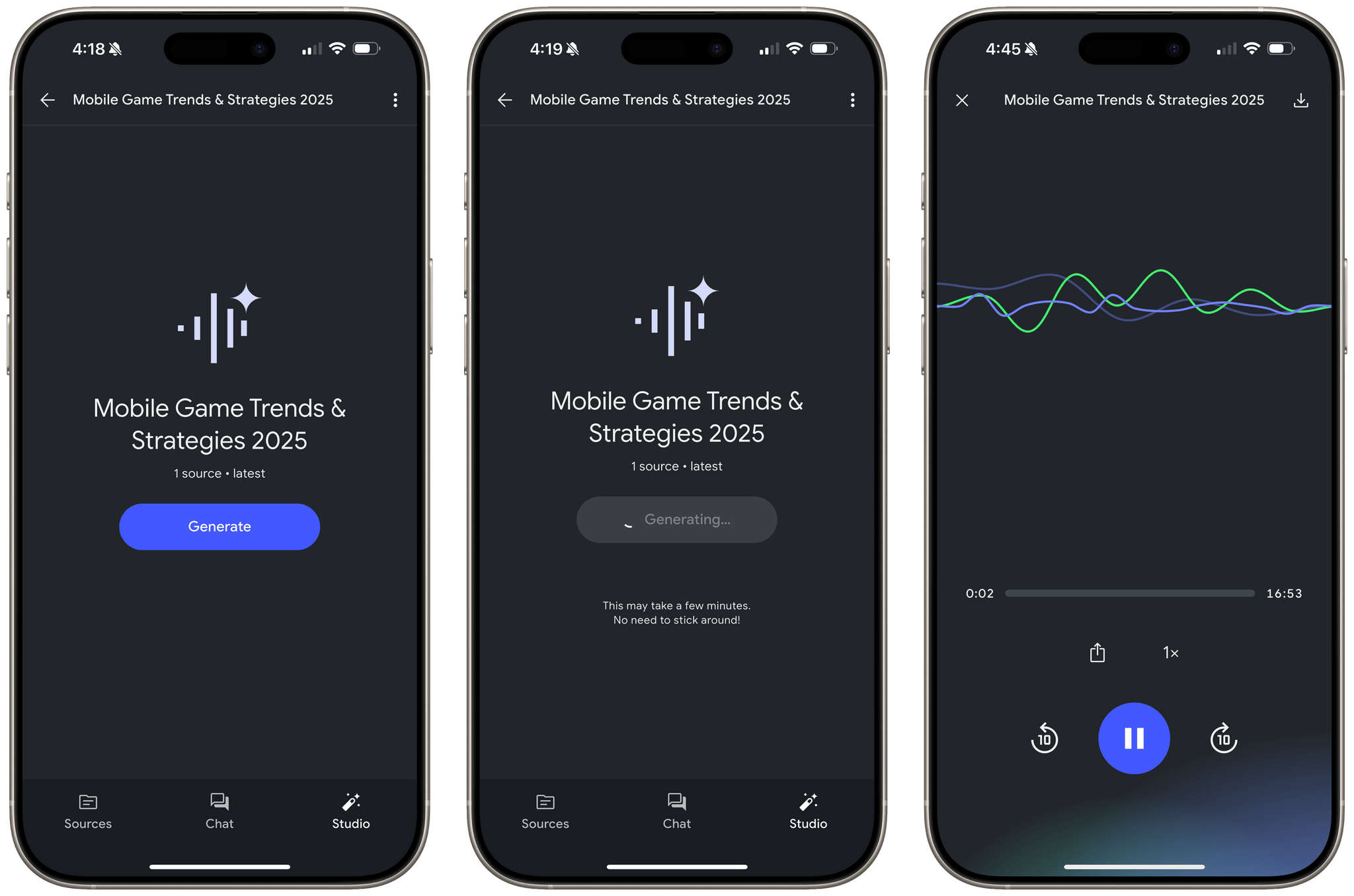

Google’s AI research tool NotebookLM dropped on the App Store for iOS and iPadOS a day earlier than expected. If you haven’t used NotebookLM before, here’s how it works: you feed it source materials like PDFs, text files, MP3s, and more. Once your sources are uploaded, you can use Google’s AI to query them, asking questions and creating materials that draw on your sources.

Of all the AI tools I’ve tried, NotebookLM’s web app is one of the best I’ve used, which is why I was excited to try it on the iPhone and iPad. I’ve only played with it for a short time, but so far, I like it a lot.

Just like the web app, you can create, edit, and delete notebooks, add new sources using the native file picker, view existing sources, chat with your sources, create summaries and timelines, and use the Studio tab to generate a faux podcast of the materials you’ve added to the app. Notebooks can also be filtered and sorted by Recent, Shared, Title, and Downloaded. Unlike the web app, you won’t see predefined prompts for things like a study guide, a briefing document, or FAQs, but you can still generate those materials by asking for them from the Chat tab.

NotebookLM’s native iOS and iPadOS app is primarily focused on audio. The app lets you generate audio overviews from the Chats tab and ‘deep dive’ podcast-style conversations that draw from your sources. Also, the audio generated can be downloaded locally, allowing you to listen later whether or not you have an Internet connection. Playback controls are basic and include buttons to play and pause, skip forward and back by 10 seconds at a time, control playback speed, and share the audio with others.

What you won’t find is any integration with features tied to App Intents. That means notebooks don’t show up in Spotlight Search, and there are no widgets, Control Center controls, or Shortcuts actions. Still, for a 1.0, NotebookLM is an excellent addition to Google’s AI tools for the iPhone and iPad.

NotebookLM is available to download from the App Store for free. Some NotebookLM features are free, while others require a subscription that can be purchased as an In-App Purchase in the App Store or from Google directly. You can learn more about the differences between the free and paid versions of NotebookLM on Google’s blog.